21 February 2025

EN 18031 Explained: What Are Privacy Assets?

The Radio Equipment Directive Delegated Act (RED DA) applies from 1 August 2025. This legislation requires manufacturers of internet-connected radio equipment to demonstrate compliance with newly established essential cybersecurity requirements.

Over the past year, we've seen EN 18031 emerge as the primary standard for manufacturers aiming to comply with the RED DA. If your equipment processes personal information, it must meet essential requirement 3.3(e) of the RED DA, and the corresponding harmonized standard, EN 18031-2, is the most effective standard to apply to meet that requirement.

Like its counterparts, EN 18031-1 and EN 18031-3, EN 18031-2 is designed to protect assets, with a specific emphasis on privacy assets. This standard aims to enhance "the ability of radio equipment to protect its [...] privacy assets against common cybersecurity threats." It is assumed that you have already identified all privacy assets before embarking on your Technical Documentation.

However, the notion of "privacy assets" is often hard to grasp. Many manufacturers report that the definitions and explanations in EN 18031 are too vague and lack concrete examples.

In this article, we will clarify one of the most critical yet frequently misunderstood concepts in EN 18031-2: privacy assets. By the end of this article, you will gain valuable insights into:

- The official definitions of privacy assets

- Our interpretation of these definitions to provide a clearer understanding of what privacy assets truly encompass

- The thought process to adopt to identify privacy assets

- How to use open-source tools to identify privacy assets

- 27 real-life examples of privacy assets

Privacy Assets Categorization: Personal Information, Privacy Functions and Privacy Function Configurations

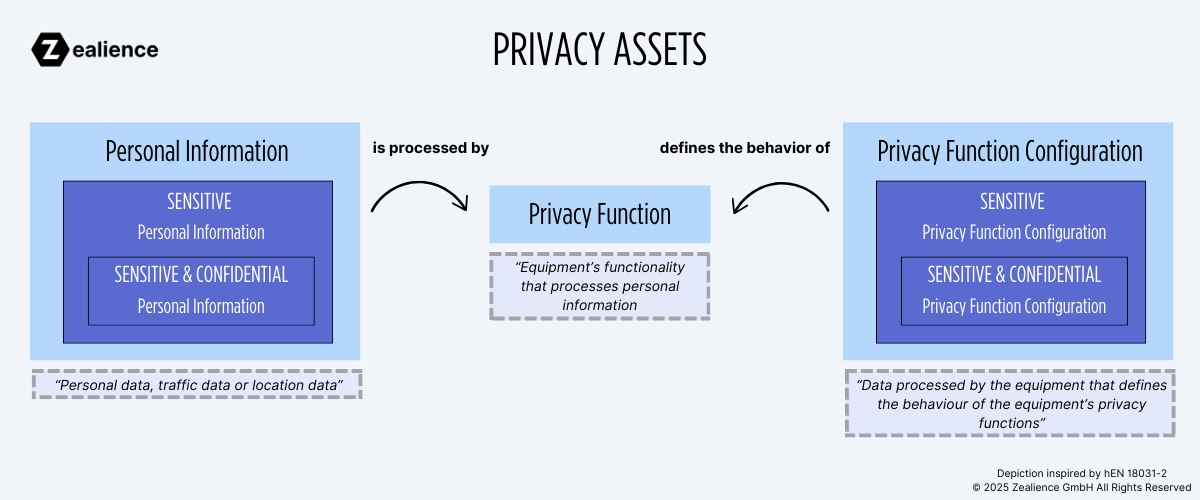

According to EN 18031-2, privacy assets comprise personal information, privacy functions, and privacy function configurations. These three types of assets are closely interrelated.

As illustrated in the figure above, personal information is processed by a privacy function, while a privacy function configuration defines the behavior of that function.

Consider, as an example, a home security camera that records (i.e., stores) video streams and can send backups to a cloud backend. The personal information includes the video footage and the SSID of the Wi-Fi network the camera is connected to. The privacy functions are the recording (i.e., storing) of the video stream and the sending of backups to the cloud. Through an app, the user of the camera can adjust the sensitivity of the motion sensor, which determines when the camera starts recording (motion-activated camera), and schedule when backups are sent to the cloud. These settings are sent to the camera and stored in a config file, i.e., a privacy function configuration.
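One way to keep these relationships explicit in your documentation is a small asset inventory. The Python sketch below models the camera example; the class and field names, and the config file path, are our own illustrative assumptions rather than anything prescribed by EN 18031-2.

```python
from dataclasses import dataclass, field


@dataclass
class PersonalInformation:
    name: str


@dataclass
class PrivacyFunctionConfiguration:
    name: str
    location: str  # where the configuration is stored on the device


@dataclass
class PrivacyFunction:
    name: str
    # personal information processed by this function
    processes: list[PersonalInformation] = field(default_factory=list)
    # configurations that define this function's behavior
    configured_by: list[PrivacyFunctionConfiguration] = field(default_factory=list)


footage = PersonalInformation("Video footage")
ssid = PersonalInformation("SSID of the connected Wi-Fi network")
settings = PrivacyFunctionConfiguration(
    "Motion sensitivity and backup schedule",
    location="/etc/camera/settings.json",  # hypothetical path
)

recording = PrivacyFunction("Video recording", processes=[footage], configured_by=[settings])
cloud_backup = PrivacyFunction("Cloud backup", processes=[footage], configured_by=[settings])

personal_information = [footage, ssid]
privacy_functions = [recording, cloud_backup]

# The example does not say which function processes the SSID, so the
# inventory flags it as personal information not yet linked to a function.
unassigned = [
    p.name for p in personal_information
    if not any(p in fn.processes for fn in privacy_functions)
]
print("Not yet linked to a privacy function:", unassigned)
```

Flagging unlinked personal information is a cheap completeness check: every piece of personal information should end up attached to at least one function that processes it.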

Understanding the relationship between these privacy assets enables you to grasp the broader context of how the standard works and the fundamental reasons for meeting specific requirements.

1. Personal Information

Official Definition of Personal Information

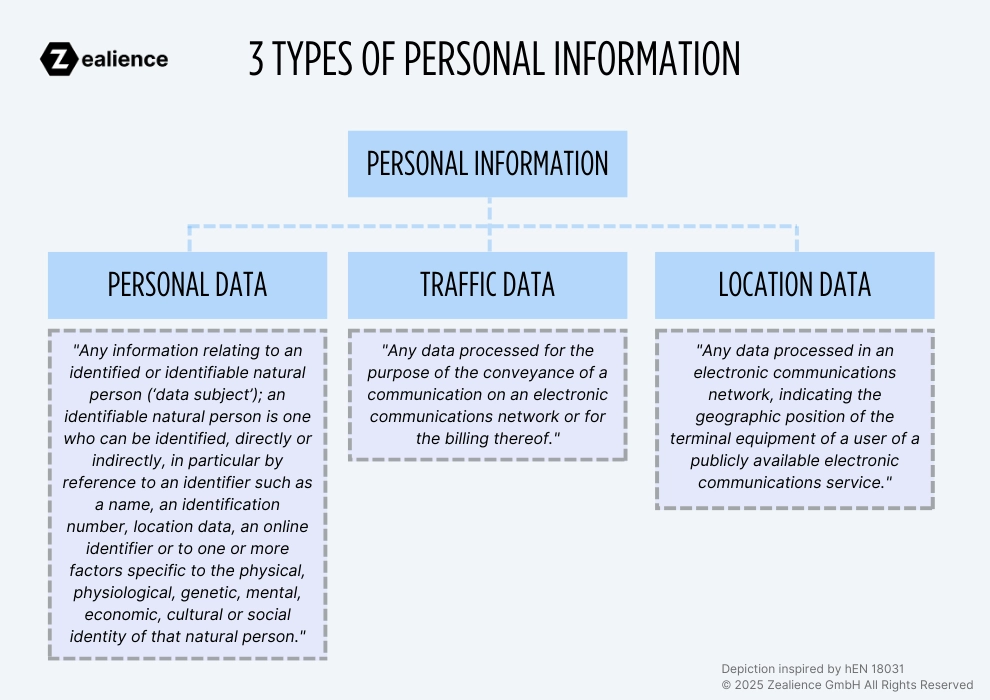

The standard EN 18031-2 defines personal information as "personal data, traffic data or location data", and refers to the EU directives where these terms are defined.

- Personal data: Defined in Article 4(1) of Regulation (EU) 2016/679 (GDPR) as "any information relating to an identified or identifiable natural person ('data subject'); an identifiable natural person is one who can be identified, directly or indirectly, in particular by reference to an identifier such as a name, an identification number, location data, an online identifier or to one or more factors specific to the physical, physiological, genetic, mental, economic, cultural or social identity of that natural person."

- Traffic data: Defined in Article 2, point (b) of Directive 2002/58/EC (ePrivacy Directive) as "any data processed for the purpose of the conveyance of a communication on an electronic communications network or for the billing thereof."

- Location data: Defined in Article 2, point (c) of Directive 2002/58/EC (ePrivacy Directive) as "any data processed in an electronic communications network, indicating the geographic position of the terminal equipment of a user of a publicly available electronic communications service."

Moreover, the standard requires additional authentication measures for "personal information of special categories." EN 18031-2 adopts the same definition as that of the GDPR:

- Personal Information of Special Categories: Defined as "personal information that is genetic data, biometric data for the purpose of uniquely identifying a natural person, data concerning health or data concerning a natural person's sex life or sexual orientation or that reveals racial or ethnic origin, political opinions, religious or philosophical beliefs, or trade union membership".

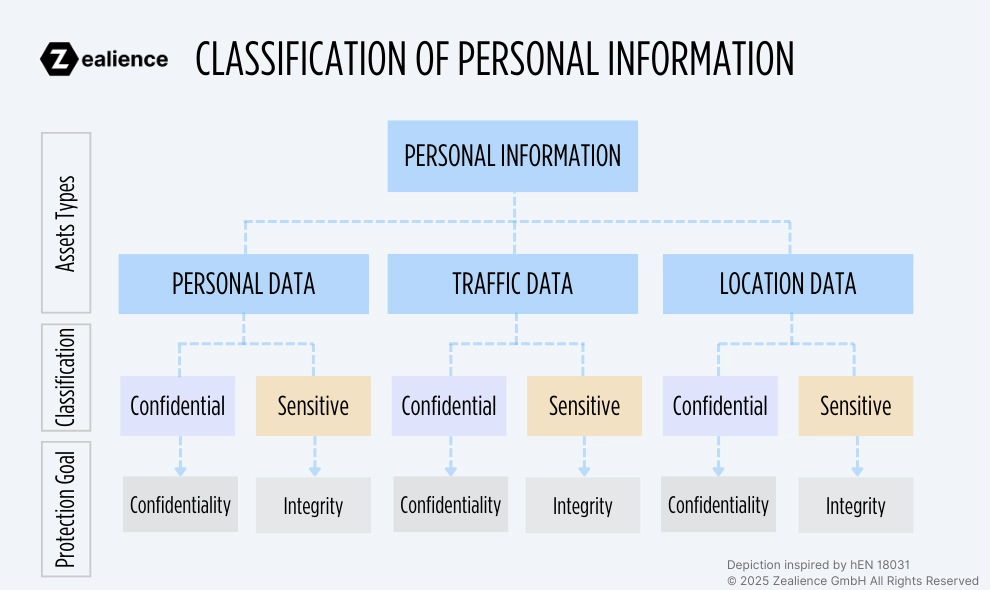

According to the standard, personal information can be classified as confidential and/or sensitive:

- Confidential personal information: Defined as "personal information whose disclosure can compromise the user's or subscriber's privacy".

- Sensitive personal information: Defined as "personal information whose manipulation can compromise the user's or subscriber's privacy".

Our Interpretation of Personal Information

To determine what constitutes personal information (i.e. personal data, traffic data and location data), it is best to refer to the legal texts mentioned above (i.e., GDPR and ePrivacy Directive). You can visit the European Commission's website for more information and examples regarding data protection and personal data: Data Protection Explained.

The Importance of Classification: Confidential and Sensitive

The classification of personal information as confidential and/or sensitive is crucial, as it determines which requirements of the standard apply to that personal information.

For example, when personal information (whether confidential or sensitive) is stored persistently in the equipment, the storage mechanism must protect its integrity (requirement SSM-2). When confidential personal information is persistently stored, the storage mechanism must also protect its confidentiality (requirement SSM-3).

This also means that it is not possible to identify the applicable requirements of the standard without having first identified the privacy assets.

The type of personal information processed by the equipment can also dictate which requirements apply. When the primary intended functionality of the equipment consists of processing personal information of special categories, two-factor authentication must be implemented (requirement AUM-2-2). Typical examples of such equipment include smart health and fitness trackers.
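Because applicability follows from the classification, it can help to derive it mechanically from the asset inventory. The Python sketch below covers only the three requirements mentioned in this article (SSM-2, SSM-3 and AUM-2-2); it is not a substitute for the full applicability conditions in the standard, and the asset names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PersonalInfoAsset:
    name: str
    confidential: bool         # confidentiality must be protected
    persistently_stored: bool  # stored persistently in the equipment


def applicable_requirements(
    assets: list[PersonalInfoAsset],
    primary_functionality_processes_special_categories: bool,
) -> dict[str, set[str]]:
    """Return the subset of requirements discussed in this article, per asset.

    The key "<equipment>" holds requirements that apply to the equipment as a whole.
    """
    result: dict[str, set[str]] = {}
    for asset in assets:
        reqs: set[str] = set()
        if asset.persistently_stored:
            reqs.add("SSM-2")  # storage must protect integrity
            if asset.confidential:
                reqs.add("SSM-3")  # storage must also protect confidentiality
        result[asset.name] = reqs
    # Two-factor authentication when the primary intended functionality
    # is processing special categories of personal information.
    result["<equipment>"] = (
        {"AUM-2-2"} if primary_functionality_processes_special_categories else set()
    )
    return result


# Hypothetical fitness tracker: heart-rate data is health data (a special category).
tracker_assets = [
    PersonalInfoAsset("Heart-rate history", confidential=True, persistently_stored=True),
    PersonalInfoAsset("Live step count", confidential=True, persistently_stored=False),
]
print(applicable_requirements(tracker_assets, primary_functionality_processes_special_categories=True))
```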

How To Classify Personal Information: Confidential vs. Sensitive

In the context of EN 18031-2, personal information (i.e., personal data, traffic data and location data) can be confidential, meaning that its confidentiality needs to be protected, and/or it can be sensitive, meaning that its integrity needs to be protected (see Figure above).

While EN 18031-2 indicates that personal information can be sensitive and/or confidential (see Annex A of the standard), we consider that it should be either sensitive, or confidential AND sensitive. This rules out personal information that is only confidential: given the security objectives to be met, we believe that if confidentiality needs to be protected, integrity must be safeguarded as well.

Watch out: The term 'sensitive' has a different meaning under the GDPR. This regulation defines "special categories of personal data" as "sensitive data". In the context of EN 18031-2, keep in mind that the terms "sensitive" and "confidential" relate to the security objectives to be met.

Although the topic of personal information is complex, we recommend the following simple rule of thumb:

- Personal data should be classified as both confidential and sensitive in almost all cases. This classification emphasizes the necessity of protecting its confidentiality and integrity. This approach is aligned with the GDPR: as stated in Art. 5(1)(f), "personal data shall be processed in a manner that ensures appropriate security of the personal data, including protection against unauthorised or unlawful processing and against accidental loss, destruction or damage, using appropriate technical or organisational measures ('integrity and confidentiality')."

- Traffic data should be classified as confidential and sensitive in nearly all cases. Article 5 of the ePrivacy Directive mandates that "Member States shall ensure the confidentiality of communications and the related traffic data".

- Location data should be assessed case by case: refer to the ePrivacy Directive and evaluate the protection goals to be met.
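The classification rule discussed above (confidentiality implies integrity) and this rule of thumb can be encoded as a small helper, for example to validate entries in an asset inventory. This Python sketch reflects our interpretation, not a rule stated in EN 18031-2; the category strings are our own labels.

```python
from enum import Flag, auto
from typing import Optional


class Protection(Flag):
    SENSITIVE = auto()     # integrity must be protected
    CONFIDENTIAL = auto()  # confidentiality must be protected


CONF_AND_SENS = Protection.CONFIDENTIAL | Protection.SENSITIVE

# Rule-of-thumb defaults per legal category (our interpretation).
DEFAULTS: dict[str, Optional[Protection]] = {
    "personal data": CONF_AND_SENS,
    "traffic data": CONF_AND_SENS,
    "location data": None,  # no default: evaluate against the ePrivacy Directive
}


def classify(category: str, override: Optional[Protection] = None) -> Protection:
    """Return the classification for a piece of personal information.

    An explicit override wins over the default; confidentiality always
    implies integrity, and "neither" is rejected.
    """
    chosen = override if override is not None else DEFAULTS[category]
    if chosen is None:
        raise ValueError(f"{category!r} needs a case-by-case assessment")
    if Protection.CONFIDENTIAL in chosen:
        chosen |= Protection.SENSITIVE  # confidential only -> confidential AND sensitive
    if not chosen:
        raise ValueError("personal information must be at least sensitive")
    return chosen


print(classify("traffic data"))
print(classify("location data", override=Protection.CONFIDENTIAL))
```

Raising an error for location data without an explicit decision forces the case-by-case evaluation instead of silently applying a default.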

How To Identify Personal Information

To identify the personal information to be documented, it can be helpful to start by identifying the two main sources of personal information: User interfaces/inputs and external sensors.

Equipment can provide various user interfaces that allow the user to input personal information. For example, the equipment can host a web application through which the user registers an account. The information collected can include an email address, full name and phone number.

A number of external sensors can be present in the device to fulfill various use cases. For example, if a microphone is available, the audio input stream may need to be documented as personal information. Similarly, if a camera or a GPS module is present, photo/video streams and location data may need to be documented. (If you want to learn about external sensors in the context of EN 18031, you can read our article on External Interfaces).
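A simple checklist can make this first pass systematic. The Python sketch below maps each user interface or external sensor found on the equipment to candidate personal information to review; the mapping only reflects the examples in this section and is a hypothetical starting point to extend for your own product.

```python
# Hypothetical starting checklist based on the examples in this section.
CANDIDATES: dict[str, list[str]] = {
    "web registration form": ["email address", "full name", "phone number"],
    "microphone": ["audio input stream"],
    "camera": ["photo stream", "video stream"],
    "gps module": ["location data"],
}


def candidate_personal_information(sources: list[str]) -> dict[str, list[str]]:
    """Map each interface/sensor to personal information worth reviewing.

    Unknown sources are not silently dropped: they are flagged for manual review.
    """
    return {
        source: CANDIDATES.get(source.lower(), ["<review manually>"])
        for source in sources
    }


for source, items in candidate_personal_information(["Microphone", "GPS module", "Bluetooth"]).items():
    print(f"{source}: {', '.join(items)}")
```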

Finding Personal Information with Open-Source Tools

We can speed up the identification of personal information with Bearer CLI, an open-source application scanner. It can "detect sensitive data flow such as the use of PII, PHI in your app, and components processing sensitive data (e.g., databases like pgSQL, third-party APIs such as OpenAI, Sentry, etc.)" and generate a privacy report. The personal information it can identify includes phone numbers, device identifiers, dates of birth, ID numbers, GPS coordinates and much more.

Note: Bearer CLI currently supports the following programming languages: JavaScript/TypeScript, Ruby, PHP, Java, Go and Python. This makes it well suited to scanning typical applications on equipment that may process personal information, such as a web application.

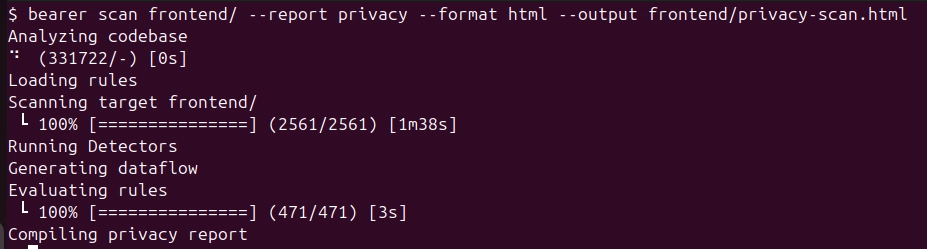

Let's use Bearer CLI to identify personal information in the front-end application of the popular open-source home automation project Home Assistant. After installing Bearer CLI, clone the repository of Home Assistant's front-end. You will have a folder called "frontend/" containing the code base and resources of the application. You can run a scan and generate a privacy report with the following command:

```shell
bearer scan frontend/ --report privacy --format html --output frontend/privacy-scan.html
```

If successful, you should get a message like the one below:

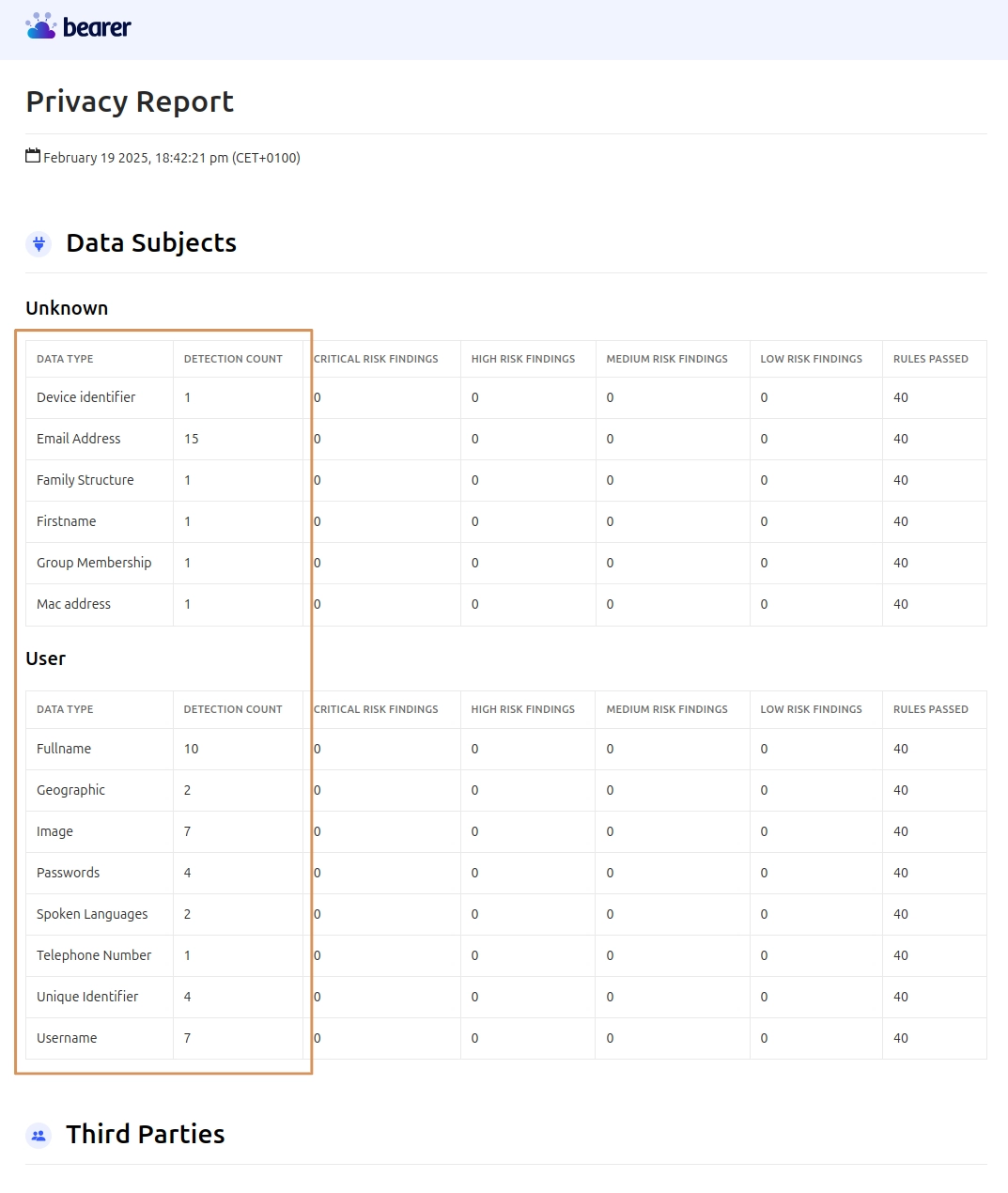

After a couple of minutes, you can open the file "privacy-scan.html" in the frontend/ folder. As shown in the screenshot below, Bearer CLI lists several types of personal information processed by the application, including device identifiers, email addresses and MAC addresses. For each data type, it also indicates how many times it was detected in the application. The findings are grouped by data subject: in our example, some of the data is grouped under the standard User subject, and the remaining data, which did not match a specific subject, is grouped under Unknown. Refer to the official documentation for more details.
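If your equipment runs several applications, the same scan can be repeated per repository with a small wrapper. This Python sketch reuses only the Bearer CLI flags shown above; the function names and the batch loop are our own, and it assumes `bearer` is installed and on the PATH.

```python
import shutil
import subprocess
from pathlib import Path


def build_privacy_scan_command(repo: Path) -> list[str]:
    """Rebuild the privacy-scan command from this article for any repository folder."""
    return [
        "bearer", "scan", str(repo),
        "--report", "privacy",
        "--format", "html",
        "--output", str(repo / "privacy-scan.html"),
    ]


def scan_all(repos: list[Path]) -> dict[Path, int]:
    """Run one privacy scan per repository and collect the exit codes."""
    if shutil.which("bearer") is None:
        raise RuntimeError("Bearer CLI is not installed or not on PATH")
    exit_codes = {}
    for repo in repos:
        # check=False: record a failing scan instead of aborting the whole batch
        completed = subprocess.run(build_privacy_scan_command(repo), check=False)
        exit_codes[repo] = completed.returncode
    return exit_codes


if __name__ == "__main__":
    print(build_privacy_scan_command(Path("frontend")))
```

Each repository gets its own `privacy-scan.html`, so the reports can be attached per application in the Technical Documentation.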

Tip: Think Ahead and Identify Privacy Functions Simultaneously

The standard states that personal information is processed by a privacy function. Therefore, when identifying personal information, we recommend also identifying privacy functions that process it.

Consider the example above of equipment with a web application that allows the user to register an account from the web interface. The personal information collected consists of an email address, full name and phone number, which are then sent to a cloud backend. In this example, the personal information processed and transmitted should be documented (either individually or as a single group of assets, see Note below), and the registration functionality of the web application should be declared as a privacy function.

📝Note: EN 18031-2 does not "determine the granularity of the documentation concerning […] privacy assets. A suitable granularity with respect to efforts in documentation can consider common access paths to and access control mechanisms of (groups of) specific assets. For example, sensitive security parameters, which are only accessible via a specific API that makes use of a specific access control mechanism can be grouped together."
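The grouping criterion suggested in the note (common access path and access control mechanism) can be applied mechanically once each asset records both attributes. In this Python sketch, the endpoint and mechanism names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    access_path: str     # interface/API through which the asset is reached
    access_control: str  # mechanism controlling access on that path


def group_assets(assets: list[Asset]) -> dict[tuple[str, str], list[str]]:
    """Group assets sharing the same access path and access control mechanism."""
    groups: dict[tuple[str, str], list[str]] = defaultdict(list)
    for asset in assets:
        groups[(asset.access_path, asset.access_control)].append(asset.name)
    return dict(groups)


# Hypothetical registration example: three fields share one API and one mechanism.
inventory = [
    Asset("Email address", "POST /api/register", "session token"),
    Asset("Full name", "POST /api/register", "session token"),
    Asset("Phone number", "POST /api/register", "session token"),
    Asset("Recorded video footage", "GET /api/recordings", "session token + owner check"),
]
for (path, mechanism), names in group_assets(inventory).items():
    print(f"{path} [{mechanism}]: {', '.join(names)}")
```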

Examples of Personal Information

- Name

- Email address

- Home address

- IP address

- Video stream

- Pictures

- GPS location

- Surrounding Wi-Fi networks' SSID names

ETSI TS 103 701 provides the following examples of personal data:

- Log data on the usage of the device

- Timestamped location data

- Audio input stream

- Biometric data

2. Privacy Functions

Official Definition of Privacy Functions

The standard EN 18031-2 defines a privacy function as an "equipment's functionality that processes personal information".

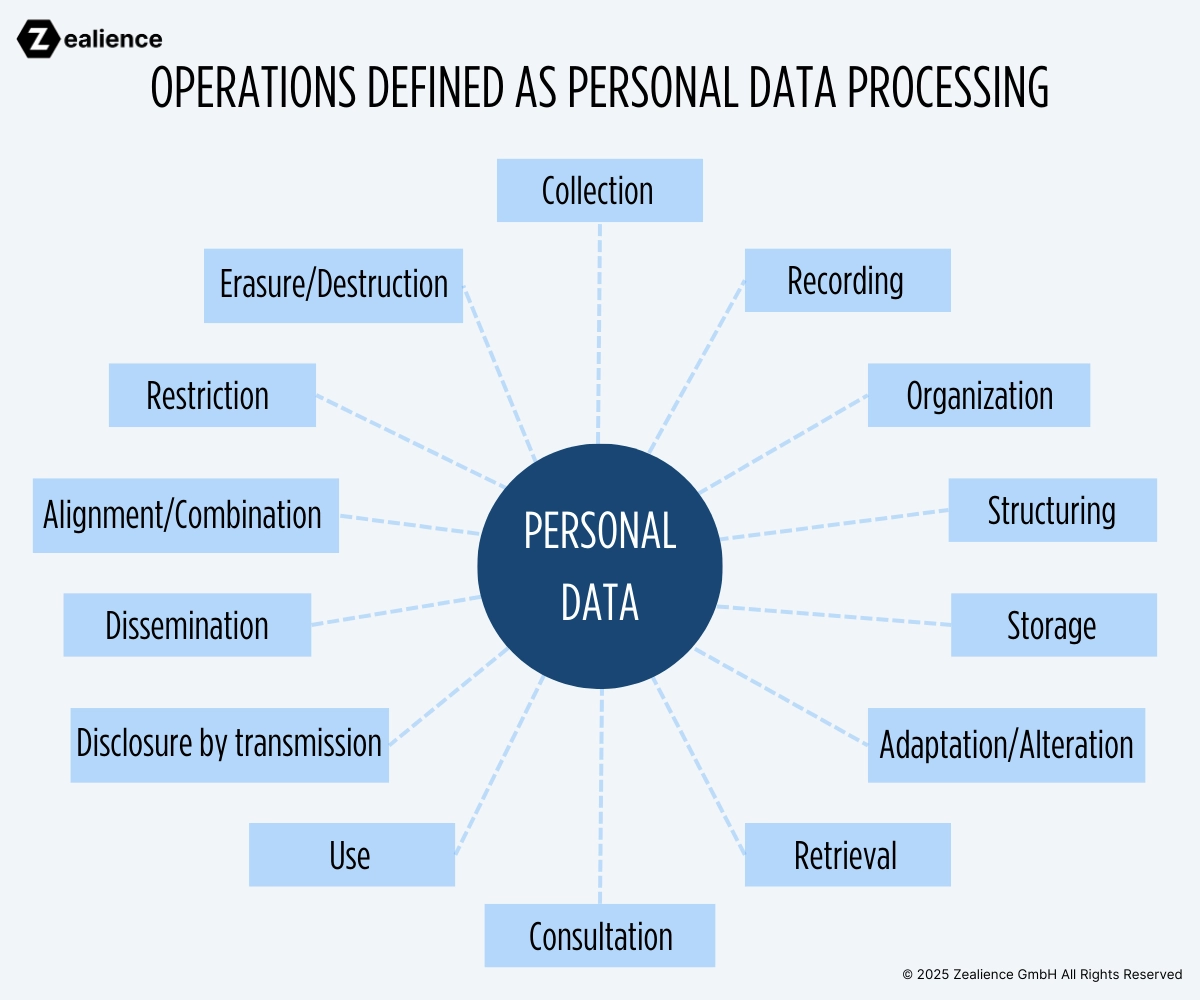

The processing of personal data is defined in Article 4(2) of Regulation (EU) 2016/679 (GDPR) as "any operation or set of operations which is performed on personal data or on sets of personal data, whether or not by automated means, such as collection, recording, organization, structuring, storage, adaptation or alteration, retrieval, consultation, use, disclosure by transmission, dissemination or otherwise making available, alignment or combination, restriction, erasure or destruction".

Our Interpretation of Privacy Functions

By combining the definitions above, we interpret any function in radio equipment that performs one or more of the operations depicted in the figure above as a privacy function. When documenting privacy functions, the key term to keep in mind is "personal data processing".

For more information and examples about personal data processing, you can visit the European Commission's website: Data Protection Explained.

Some examples of personal data processing listed on the website include:

- "Accessing or consulting a contacts database containing personal data"

- "Posting a photo of a person on a website"

- "Storing IP addresses or MAC addresses"

- "Video recording (CCTV)"

In light of the examples above, we interpret any application (or part of an application) capable of performing such processing operations as a privacy function. In the context of EN 18031-2 requirements, operations such as recording, storing, consultation and transmission are particularly relevant, as they require specific security mechanisms to be in place, such as secure storage mechanisms (SSM), access control and authentication mechanisms (ACM, AUM), and secure communication mechanisms (SCM).
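This interpretation can be turned into a quick check while documenting. The Python sketch below uses the operations listed in GDPR Art. 4(2) and flags those this article highlights as particularly relevant; note that the GDPR wording is "storage" and "disclosure by transmission" rather than "storing" and "transmission". The function name and return shape are our own.

```python
# Processing operations listed in GDPR Art. 4(2)
GDPR_OPERATIONS = frozenset({
    "collection", "recording", "organization", "structuring", "storage",
    "adaptation", "alteration", "retrieval", "consultation", "use",
    "disclosure by transmission", "dissemination", "making available",
    "alignment", "combination", "restriction", "erasure", "destruction",
})

# Operations this article highlights because they call for specific mechanisms
# (secure storage, access control/authentication, secure communication)
HIGHLIGHTED = frozenset({"recording", "storage", "consultation", "disclosure by transmission"})


def assess(operations: set[str], handles_personal_information: bool) -> tuple[bool, list[str]]:
    """Return (is_privacy_function, highlighted operations performed)."""
    unknown = operations - GDPR_OPERATIONS
    if unknown:
        raise ValueError(f"not an Art. 4(2) operation: {sorted(unknown)}")
    is_privacy_function = handles_personal_information and bool(operations)
    return is_privacy_function, sorted(operations & HIGHLIGHTED)


# Cloud backup of a security camera: stores footage and transmits it
print(assess({"storage", "disclosure by transmission"}, handles_personal_information=True))
# (True, ['disclosure by transmission', 'storage'])
```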

For example, the recording (i.e., storing) of a home security camera's video stream should be documented as a privacy function. A function that transmits the stream or recorded footage to a cloud backend should also be declared as a privacy function.

While a privacy function can be a stand-alone application, it can also be a functionality of another application. For example, a web server (considered a network function under EN 18031-1) can have a user registration page that processes personal information. This user registration functionality can be regarded as a privacy function.

Decomposing certain applications into relevant functions can ease the documentation effort. For instance, when applying EN 18031-1, -2 and -3 to equipment, a single application can actually comprise security functions, network functions and privacy functions. The standards do not impose a level of granularity for this decomposition, so always refer to their definitions to determine what constitutes a privacy function within another application.

How To Identify Privacy Functions

The same methodology used to identify personal information can be applied to identify privacy functions, as they can often be identified simultaneously. We recommend starting by identifying user interfaces/inputs and external sensors on your device, followed by determining the personal information collected and the privacy functions implemented to process that information. For each function, ask yourself which of the above processing operation(s) it performs.

Examples of Privacy Functions

Examples provided in EN 18031-2 in Annex A:

- "An implementation to record the GPS-track of the user"

- "An Emailing functionality storing the name and email address of the user."

Other examples include:

- Software to record voice memos

- A web application's functionality handling user registration

- User consent management

- Data anonymization function

- Biometrics processing for authentication

3. Privacy Function Configurations

Official Definition of Privacy Function Configurations

The standard EN 18031-2 defines a privacy function configuration as "data processed by the equipment that defines the behavior of the equipment's privacy functions".

Privacy function configuration can be confidential and/or sensitive:

- Confidential privacy function configuration is defined as "privacy function configuration whose disclosure can compromise the user's or subscriber's privacy".

- Sensitive privacy function configuration is defined as "privacy function configuration whose manipulation can compromise the user's or subscriber's privacy".

Our Interpretation of Privacy Function Configurations

Privacy function configurations are used to configure privacy functions in various ways to fulfil specific use cases. Since, as explained above, a privacy function can be an application or part of an application on the equipment, a privacy function configuration can be a file containing options or parameters that the application processes to define its behavior. Certain privacy functions can also be configured by the user through a user interface.

Consider, as an example, an application that collects user activity logs. These logs contain personal information and are sent to a cloud backend. The application relies on a config file in which a variable can be set to enable or disable the transmission of logs. This config file can be documented as a privacy function configuration.
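A minimal Python sketch of this pattern, assuming a JSON config file and a hypothetical `upload_logs` key: the application reads the configuration and only transmits logs when it explicitly allows it. It also illustrates why such a file needs at least integrity protection: anyone able to modify it can switch transmission on.

```python
import json
from typing import Callable


def load_privacy_config(raw: str) -> dict:
    """Parse the privacy function configuration (JSON format assumed)."""
    return json.loads(raw)


def upload_logs_if_enabled(
    config: dict, logs: list[str], send: Callable[[list[str]], None]
) -> bool:
    """Transmit activity logs only if the configuration enables it.

    Only a JSON boolean true enables transmission; a missing key or any
    other value means "disabled", a privacy-preserving default we chose.
    """
    if config.get("upload_logs", False) is not True:
        return False
    send(logs)
    return True


sent: list[list[str]] = []
config = load_privacy_config('{"upload_logs": false}')
print(upload_logs_if_enabled(config, ["user opened app"], sent.append))  # False, nothing sent
```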

Privacy function configurations can be confidential, meaning that their confidentiality needs to be protected, and/or they can be sensitive, meaning that their integrity needs to be protected.

The Importance of Classification: Confidential vs. Sensitive

As with personal information, the classification of privacy function configurations as confidential and/or sensitive is crucial, as it dictates which requirements of the standard apply. For example, when confidential privacy function configurations are persistently stored in the equipment, their storage mechanism must protect their confidentiality (SSM-3). It is therefore essential to classify these assets appropriately when identifying them.

While hEN 18031-2 indicates that a privacy function configuration can be sensitive and/or confidential (see Annex A of the standard), we consider that it should be either sensitive, or confidential AND sensitive. This rules out configurations that are only confidential: given the security objectives to be met, we believe that if confidentiality needs to be protected, integrity must be safeguarded as well.

How To Identify Privacy Function Configurations

In our previous article covering network assets, we explained how to identify network function configurations using the firmware analyzer EMBA. A similar approach can be taken to identify privacy function configurations used by applications that process personal information.
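As a rough complement (not a replacement for EMBA), a keyword search over an already extracted root filesystem can shortlist config files worth reviewing. In this Python sketch, the file suffixes and keywords are our own assumptions and will produce false positives and misses; treat the output as a reading list.

```python
import os
from pathlib import Path

# Hypothetical heuristics: adjust both lists to your product.
CONFIG_SUFFIXES = {".conf", ".cfg", ".ini", ".json", ".yaml", ".yml"}
PRIVACY_KEYWORDS = ("record", "upload", "retention", "telemetry", "analytics", "location", "consent")


def find_candidate_configs(rootfs: Path) -> dict[str, list[str]]:
    """List config-like files under an extracted root filesystem that mention
    privacy-related keywords, with the keywords that matched."""
    hits: dict[str, list[str]] = {}
    for dirpath, _dirnames, filenames in os.walk(rootfs):  # does not follow symlinked dirs
        for filename in filenames:
            path = Path(dirpath) / filename
            # Skip symlinks: firmware often uses absolute links that would
            # resolve to files on the analysis host instead of the image.
            if path.is_symlink() or not path.is_file():
                continue
            if path.suffix.lower() not in CONFIG_SUFFIXES:
                continue
            try:
                text = path.read_text(encoding="utf-8", errors="ignore").lower()
            except OSError:
                continue
            matched = [kw for kw in PRIVACY_KEYWORDS if kw in text]
            if matched:
                hits[path.relative_to(rootfs).as_posix()] = matched
    return hits
```

For example, `find_candidate_configs(Path("rootfs"))` returns a mapping such as `{"etc/camera.json": ["record"]}` for each matching file, relative to the extracted root.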

Examples of Privacy Function Configurations

- The start/stop recording setting that a user changes through the home security camera's web application

- The data retention duration set by the user

- The set of fingerprints the user registers via the fingerprint reader

- Settings that allow users to choose their preferred method of receiving notifications

Ready To Start Your hEN 18031 Compliance?

You can download our free and open-source Technical Documentation templates from our GitHub repository. If you have questions about their usage, simply shoot us a line!

- EN 18031-1, -2 and -3 Technical Documentation template

- EN 18031-1, -2 and -3 Test plan template

Author of This Article ✏️

Dr. Guillaume Dupont is a co-founder of Zealience. He holds a PhD in IoT cybersecurity. As a former Senior Security Expert at UL Solutions, he helped IoT manufacturers prepare for the RED DA by performing evaluations against product security standards such as ETSI EN 303 645 and IEC 62443-4-2. He has contributed to the drafting of EN 18031 and also trained a Notified Body for RED DA assessments. He previously worked at Forescout on automotive security and developed intrusion detection systems for in-vehicle networks. He is also a seasoned IoT vulnerability researcher and disclosed CVEs found in medical devices to Siemens Healthineers. His research on IoT security led him to obtain a US patent: He invented a novel approach to enhance the accuracy of IoT device classification leveraging machine learning algorithms (US20220353153).